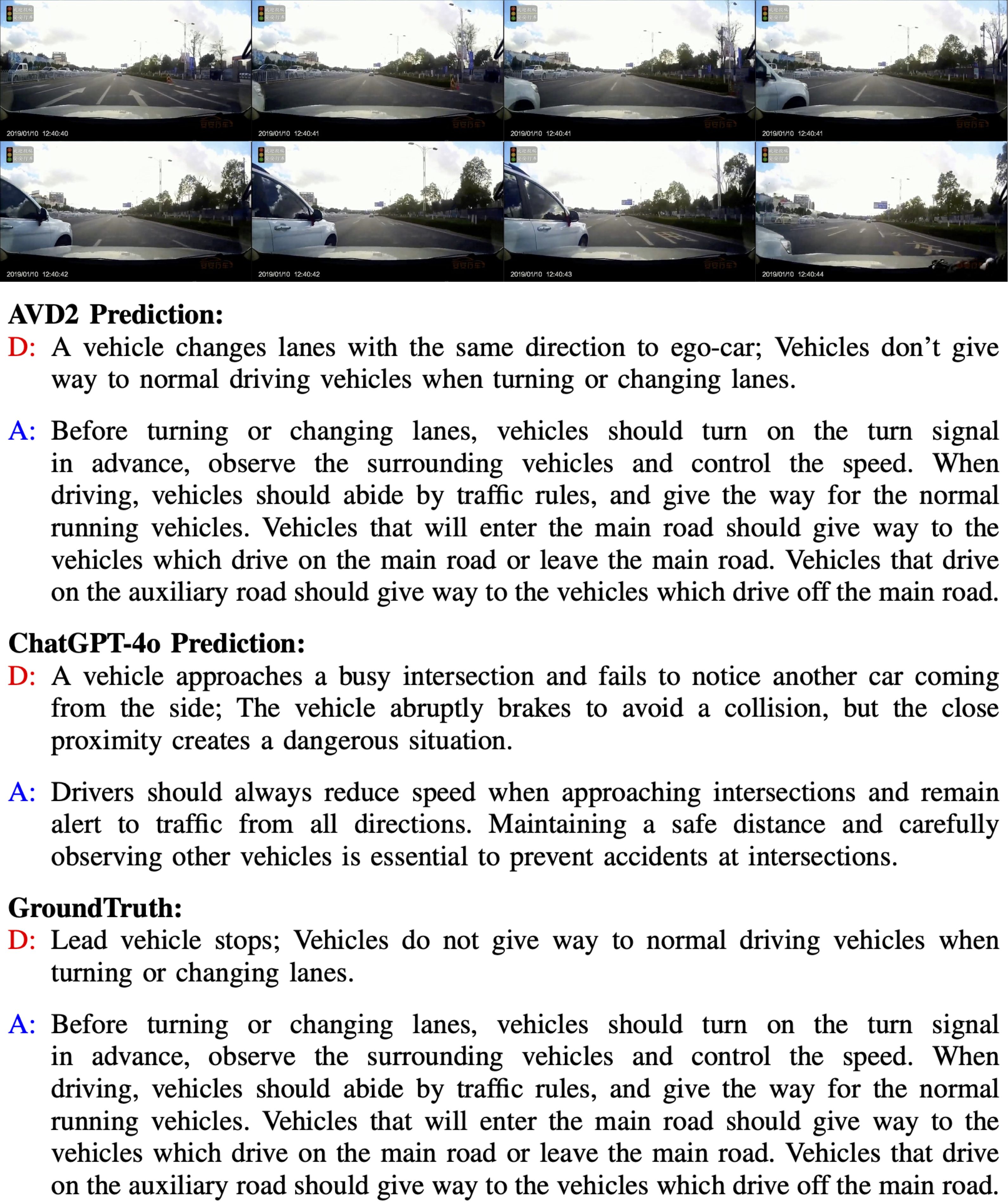

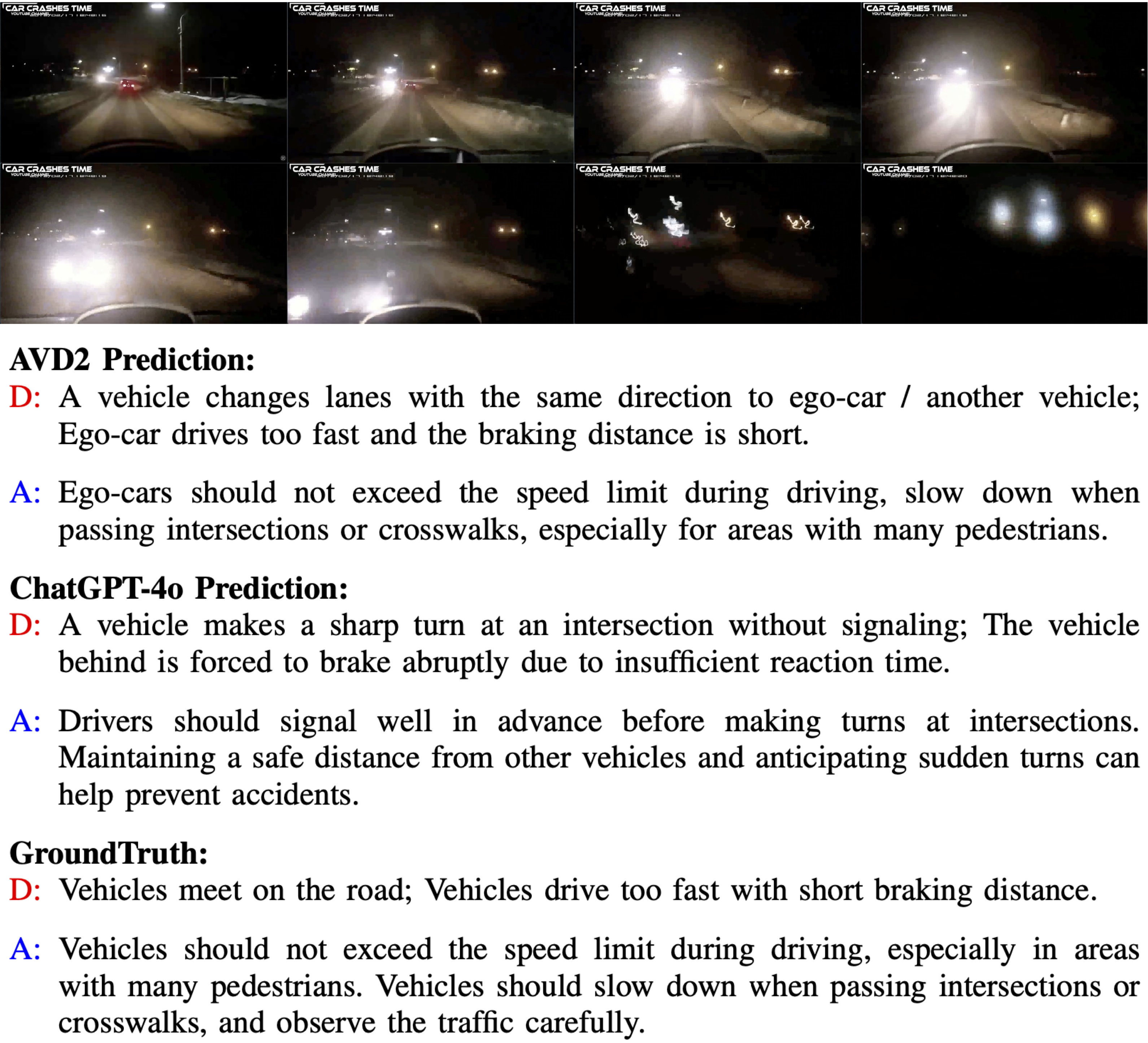

Traffic accidents present complex challenges for autonomous driving, often featuring unpredictable scenarios that hinder accurate system interpretation and response. Prevailing methods nonetheless fall short in explaining the causes of accidents and proposing preventive measures, owing to the scarcity of training data specific to accident scenarios. In this work, we introduce AVD2 (Accident Video Diffusion for Accident Video Description), a novel framework that enhances accident scene understanding by generating accident videos that are aligned with detailed natural language descriptions and reasoning, resulting in the contributed EMM-AU (Enhanced Multi-Modal Accident Video Understanding) dataset. Empirical results show that integrating the EMM-AU dataset yields state-of-the-art performance on both automated metrics and human evaluations, markedly advancing accident analysis and prevention.

@article{arxiv:2502.14801,

author = {Cheng Li and Keyuan Zhou and Tong Liu and Yu Wang and Mingqiao Zhuang and Huan-ang Gao and Bu Jin and Hao Zhao},

title = {AVD2: Accident Video Diffusion for Accident Video Description},

journal = {arXiv:2502.14801},

year = {2025},

url = {https://doi.org/10.48550/arXiv.2502.14801},

}